Idea-2-3D

Idea-2-3D is an automatic 3D model design and generation system based on collaborative LMM agents, supporting multimodal input, and providing users with better visua...

Tags: AI Design, 3D design, Idea23D, iterative refinement, multimodal models, text-to-3D

Idea23D is a multimodal iterative self-refinement system designed to improve how text-to-image models can be used for creating 3D models. It uses collaborating large multimodal models (LMMs) that accept both text and image inputs to generate high-quality 3D designs. The system refines the generated 3D models over multiple iterations, improving both the visual output and the system’s handling of complex prompts.

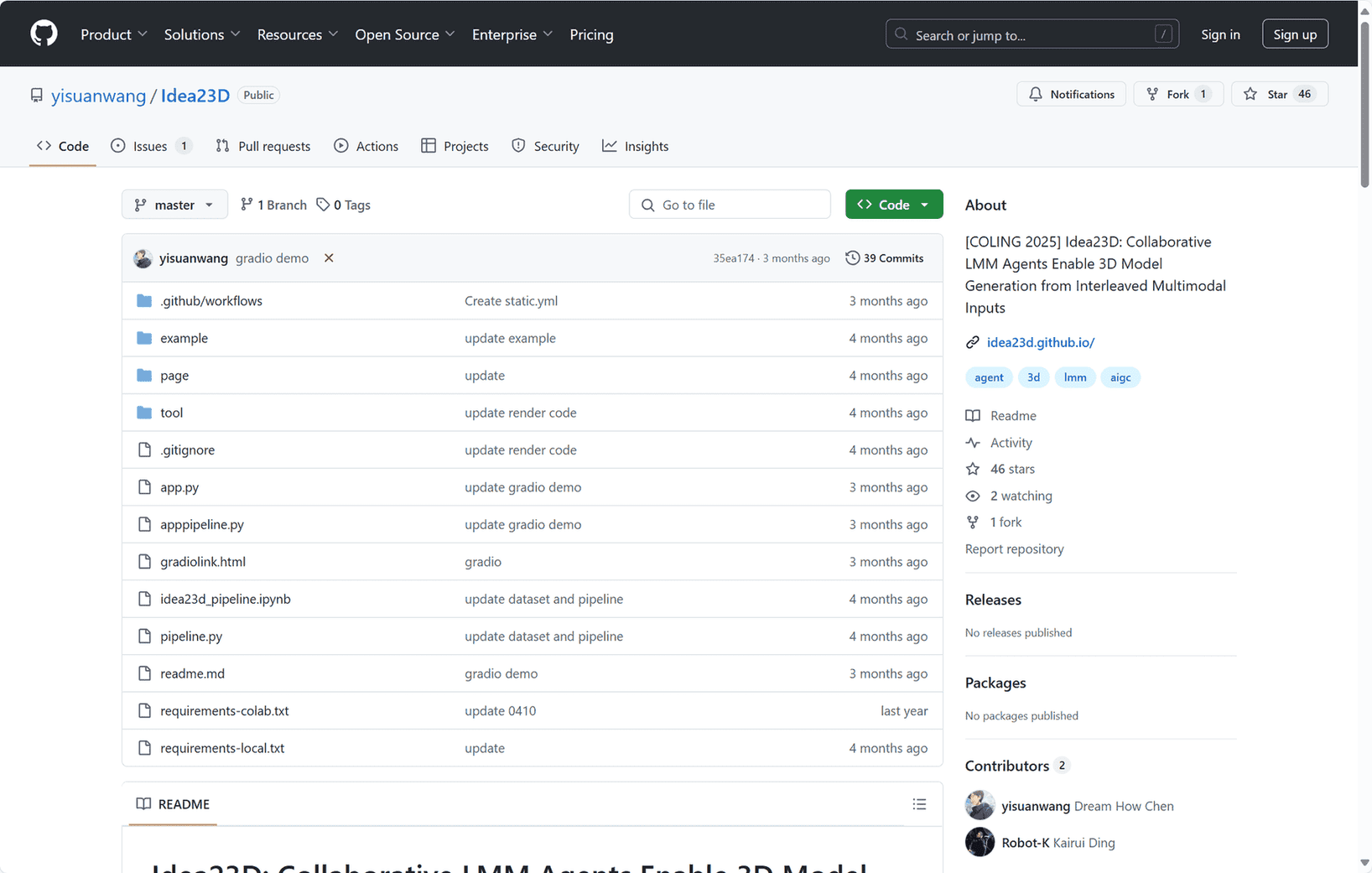
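The iterative self-refinement idea described above can be illustrated with a generic generate, critique, revise loop. This is only a minimal sketch under stated assumptions, not the project's actual code: the `refine` function, the `Candidate` record, and the toy `toy_generate` and `toy_critique` stand-ins are hypothetical names introduced here, and a real system would replace them with calls to image, 3D, and multimodal models.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Candidate:
    prompt: str    # prompt used to produce this candidate
    output: str    # whatever the generator returned (a 3D asset in a real system)
    score: float   # critic's rating in [0, 1]; higher is better
    feedback: str  # critic's suggestion for the next round


def refine(
    idea: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Tuple[float, str]],
    max_rounds: int = 3,
    good_enough: float = 0.9,
) -> Tuple[Candidate, List[Candidate]]:
    """Hypothetical generate -> critique -> revise loop."""
    prompt = idea
    history: List[Candidate] = []
    for _ in range(max_rounds):
        output = generate(prompt)
        score, feedback = critique(idea, output)
        history.append(Candidate(prompt, output, score, feedback))
        if score >= good_enough:
            break  # stop early once the critic is satisfied
        # Fold the critic's feedback into the prompt for the next round.
        prompt = f"{idea}. Revision note: {feedback}"
    best = max(history, key=lambda c: c.score)
    return best, history


def toy_generate(prompt: str) -> str:
    # Stand-in for a text-to-image plus image-to-3D stage (assumption).
    return f"<3D draft for: {prompt}>"


def toy_critique(idea: str, output: str) -> Tuple[float, str]:
    # Stand-in for a multimodal critic (assumption): it rewards drafts
    # that were produced from a revised prompt.
    if "Revision note" in output:
        return 0.95, "looks consistent with the idea"
    return 0.4, "add the missing details from the idea"


best, history = refine(
    "a wooden chair shaped like a leaf", toy_generate, toy_critique
)
print(len(history), best.score)
```

With these toy stand-ins the loop stops after the second round, because the revised prompt yields a draft that the critic scores above the `good_enough` threshold. The threshold and round limit are arbitrary illustrative values.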

The project has been selected for presentation at the International Conference on Computational Linguistics (COLING 2025), which will take place in Abu Dhabi, UAE, in January 2025. This recognition highlights its significance in the fields of computational linguistics and visual computing.

A demo of Idea23D is available through a Gradio interface, where users can interact with the system and experience how it converts multimodal inputs into detailed 3D models. The system allows for hands-on exploration of the design process, offering insight into how 3D outputs are refined over time.

The project is led by a team of researchers including Junhao Chen, Xiang Li, Xiaojun Ye, Chao Li, Zhaoxin Fan, and Hao Zhao. Their work represents a step forward in integrating language and visual inputs into a coherent 3D design workflow.

The source code and technical details are publicly available for those interested in exploring the system further or contributing to its development. Idea23D sets the stage for new applications in design, gaming, and virtual content creation.