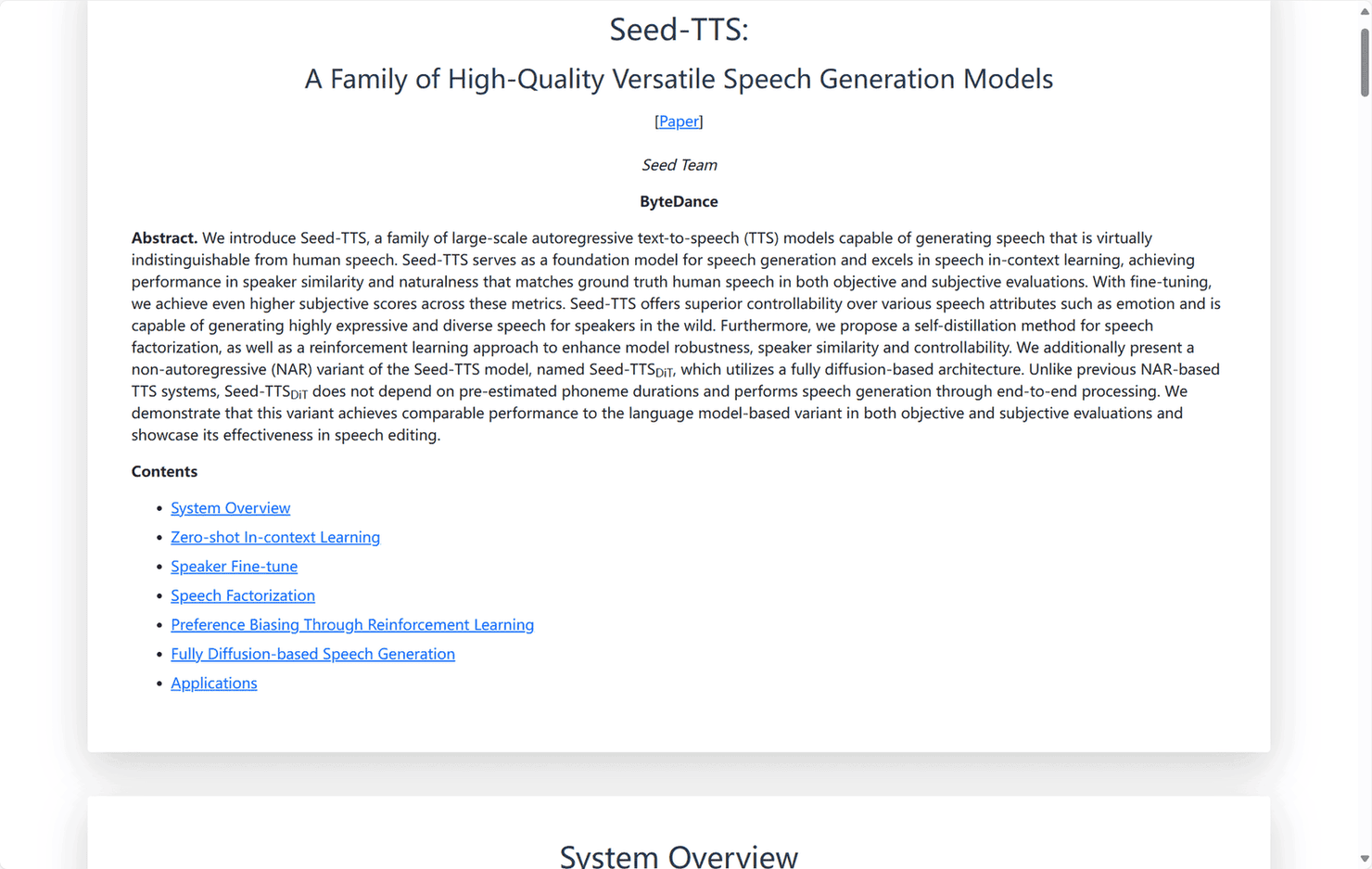

Seed-TTS

Seed-TTS is a high-quality, versatile speech generation model whose output is almost indistinguishable from human speech, and it supports features such as...

Seed-TTS is a family of large-scale autoregressive text-to-speech (TTS) models developed by ByteDance that produce speech closely resembling human speech in both speaker similarity and naturalness. The models serve as foundation models for speech generation and excel at zero-shot in-context learning: given a short reference recording, they can adapt to a new speaker or emotion without additional training. Fine-tuning improves them further, yielding higher subjective scores on these same metrics.
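To make in-context prompting concrete, here is a minimal toy sketch. It assumes a layout common to language-model-based TTS systems (reference transcript and target text first, then the reference clip's speech tokens, which the model continues); this is not Seed-TTS's actual token format, which is not described here. `BOS_SPEECH`, `build_prompt`, `generate`, and `toy_next_token` are hypothetical names, and the toy "model" simply repeats the reference pattern in place of a trained network.

```python
from typing import Callable, List

# Hypothetical separator between the text segment and the speech segment.
BOS_SPEECH = -1


def build_prompt(ref_text_ids: List[int], target_text_ids: List[int],
                 ref_speech_ids: List[int]) -> List[int]:
    """Lay out text for both utterances, then the reference speech tokens.

    The model sees how the reference text maps to the reference speaker's
    audio tokens, so continuing the speech segment (in a real model) should
    render the target text in that speaker's voice.
    """
    return ref_text_ids + target_text_ids + [BOS_SPEECH] + ref_speech_ids


def generate(next_token: Callable[[List[int]], int], prompt: List[int],
             max_new_tokens: int, eos: int) -> List[int]:
    """Greedy autoregressive continuation; returns only the new tokens."""
    seq = list(prompt)
    out: List[int] = []
    for _ in range(max_new_tokens):
        tok = next_token(seq)
        if tok == eos:
            break
        seq.append(tok)
        out.append(tok)
    return out


def toy_next_token(seq: List[int]) -> int:
    """Toy stand-in for a trained model: repeats the speech pattern with
    period 3 and emits EOS (0) once the speech segment has 9 tokens."""
    speech = seq[seq.index(BOS_SPEECH) + 1:]
    if len(speech) >= 9:
        return 0
    return speech[-3]


if __name__ == "__main__":
    prompt = build_prompt([1, 2], [3, 4, 5], [101, 102, 103])
    print(generate(toy_next_token, prompt, max_new_tokens=20, eos=0))
```

In a real system the generated speech tokens would then be decoded to audio; the point of the sketch is only that the reference clip conditions generation through the context, with no weight updates.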

Key Features:

- Versatile Speech Generation: Seed-TTS models can generate expressive and diverse speech, capturing attributes such as emotion, speaker identity, and speaking style.

- Speech Factorization: The models use a self-distillation method for speech factorization, which better separates speech components such as speaker timbre and improves speech quality.

- Reinforcement Learning Enhancements: A reinforcement learning approach improves model robustness, speaker similarity, and controllability, yielding natural-sounding, high-quality output.

- Non-Autoregressive Variant: Seed-TTS includes a non-autoregressive variant, Seed-TTS_DiT, built on a fully diffusion-based architecture. It performs end-to-end speech generation without relying on pre-estimated phoneme durations, which makes synthesis more efficient and flexible.
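The reinforcement learning item names robustness and speaker similarity as targets. One plausible way to turn such targets into a scalar reward, sketched here under stated assumptions, is to penalize the word error rate (WER) of an ASR transcript of the generated audio and to reward the cosine similarity between speaker embeddings of the prompt and the output. The ASR system, the speaker-embedding model, and the `wer_weight` trade-off are hypothetical placeholders; this is not ByteDance's published reward function.

```python
import math
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] = edit distance between ref[:i-1] and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, 1):
            cur[j] = min(prev[j] + 1,             # deletion
                         cur[j - 1] + 1,          # insertion
                         prev[j - 1] + (r != h))  # substitution or match
        prev = cur
    return prev[-1] / max(len(ref), 1)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def reward(target_text: str, asr_transcript: str,
           prompt_embedding: Sequence[float],
           output_embedding: Sequence[float],
           wer_weight: float = 1.0) -> float:
    """Hypothetical reward: speaker similarity minus weighted WER."""
    sim = cosine_similarity(prompt_embedding, output_embedding)
    return sim - wer_weight * word_error_rate(target_text, asr_transcript)


if __name__ == "__main__":
    print(reward("the cat sat", "the hat sat", [1.0, 0.0], [1.0, 0.0]))
```

A policy-gradient method would then push the model toward outputs with higher reward; the weighting is a design choice that trades intelligibility against voice match.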

Applications:

Seed-TTS models have been successfully applied in various scenarios, including speech editing, voice cloning, and multilingual speech generation. Their ability to generate high-quality and natural-sounding speech makes them suitable for applications in virtual assistants, audiobooks, and interactive voice response systems.