SEED-Story – AI

SEED-Story is a multimodal long story generation tool that combines text and images to generate rich and coherent stories. It is suitable for story creation and conten...

Tags: AI writing, Content Generation, Long-form Narrative, Multimodal Storytelling, StoryStream Dataset, Visual Consistency

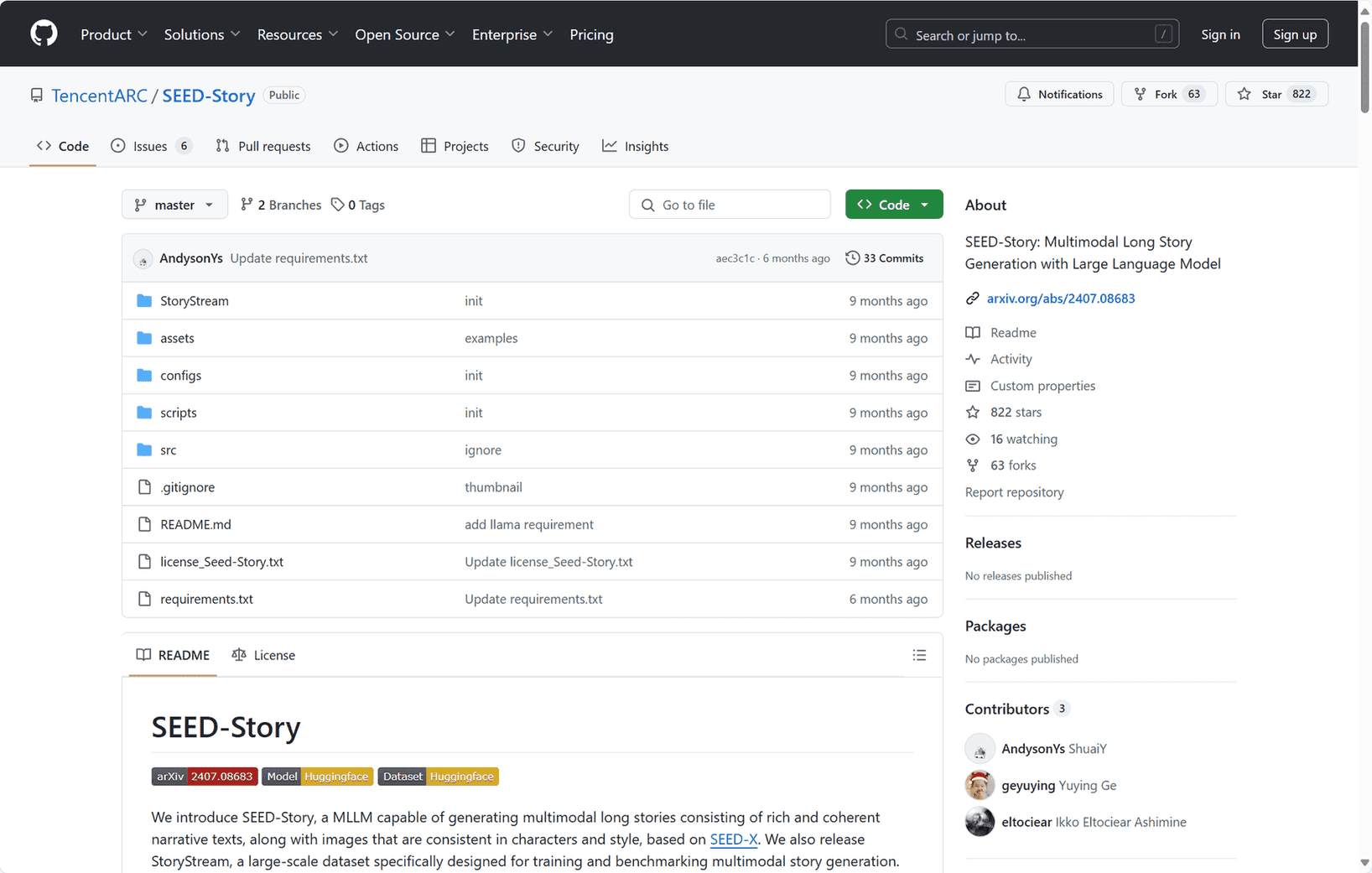

SEED-Story is a project developed by Tencent’s Applied Research Center (ARC) that focuses on generating long-form multimodal narratives. It integrates textual storytelling with corresponding images, ensuring consistency in characters and style throughout the narrative.

Key Features

- Multimodal Story Generation: SEED-Story produces narratives that combine text and images while maintaining narrative coherence and visual consistency.

- StoryStream Dataset: To support the development and evaluation of multimodal story generation, the project introduces StoryStream, a large-scale dataset comprising detailed narratives paired with high-resolution images.

Technical Approach

The system uses a Multimodal Large Language Model (MLLM) that predicts both text tokens and visual tokens. The visual tokens are passed through a visual de-tokenizer to generate images that stay consistent with the narrative’s characters and style. In addition, the model incorporates a multimodal attention sink mechanism, which lets it generate long story sequences efficiently in an autoregressive manner.
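To make the flow of text and visual tokens more concrete, here is a minimal sketch of how one interleaved output stream could be split so that the visual spans can be handed to a de-tokenizer. It is an illustration only, not SEED-Story's actual code: the marker ids `BOI` and `EOI`, the function name `split_interleaved`, and the sample token ids are all hypothetical, and it assumes image spans are delimited by begin and end markers.

```python
from typing import List, Tuple

# Hypothetical marker ids (assumption): the real vocabulary is not described here.
BOI = -1  # begin-of-image marker
EOI = -2  # end-of-image marker

Segment = Tuple[str, List[int]]


def split_interleaved(tokens: List[int], boi: int = BOI, eoi: int = EOI) -> List[Segment]:
    """Split one generated stream into ("text", ids) and ("image", ids) segments."""
    segments: List[Segment] = []
    current: List[int] = []
    in_image = False
    for tok in tokens:
        if tok == boi:
            if in_image:
                raise ValueError("nested begin-of-image marker")
            if current:
                # Flush the text collected before the image span starts.
                segments.append(("text", current))
            current, in_image = [], True
        elif tok == eoi:
            if not in_image:
                raise ValueError("end-of-image marker without a begin marker")
            # These ids are what a visual de-tokenizer would receive.
            segments.append(("image", current))
            current, in_image = [], False
        else:
            current.append(tok)
    if in_image:
        raise ValueError("stream ended inside an image span")
    if current:
        segments.append(("text", current))
    return segments


# Toy stream: two text tokens, an image span, one text token, another image span.
stream = [11, 12, BOI, 901, 902, 903, EOI, 13, BOI, 904, 905, EOI]
segments = split_interleaved(stream)
```

In a pipeline of this shape, each `"text"` segment would go to the text decoder and each `"image"` segment to the visual de-tokenizer, which preserves the order in which story text and illustrations were generated.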

Applications and Implications

SEED-Story’s ability to generate coherent text-image narratives has potential applications in various fields, including entertainment, education, and content creation. By automating the production of illustrated stories, it offers a tool for creators to develop rich multimedia content efficiently.

In summary, SEED-Story provides a framework for generating cohesive multimodal narratives in which text and images are produced together and remain consistent across a long story.