MusiConGen

MusiConGen is a Transformer-based text-to-music model that can accurately control rhythm and chords, supports a variety of music styles, and is suitable for mus...

Tags: AI Audio Tools, chord control, MusiConGen, rhythm control, text-to-music, Transformer model

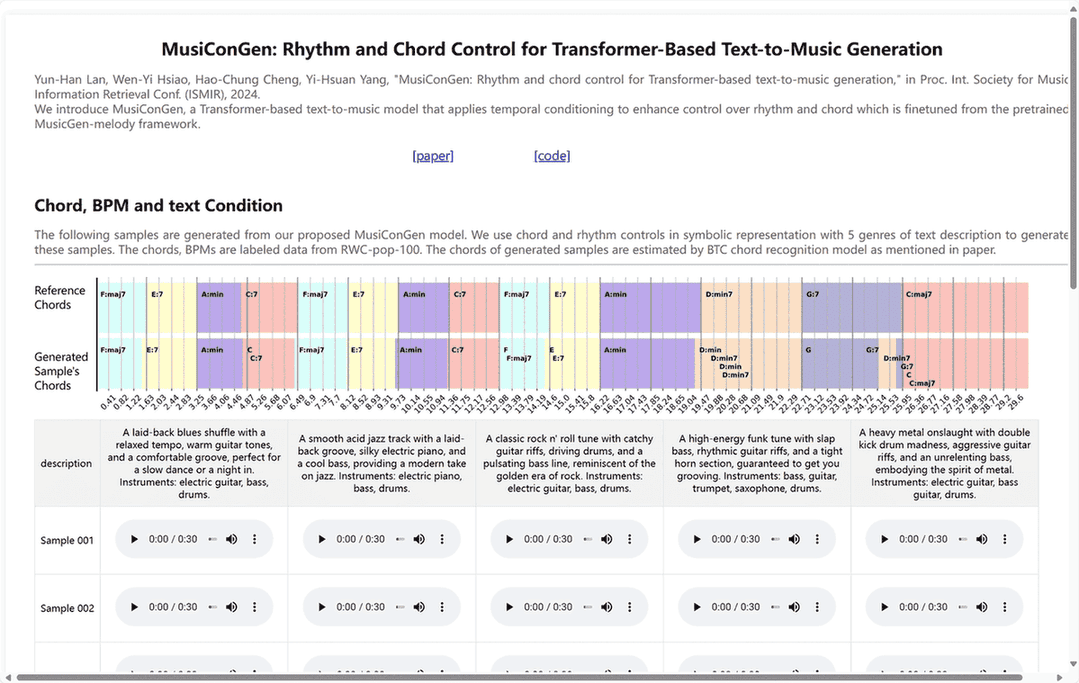

MusiConGen is a Transformer-based text-to-music generation model developed to enhance control over rhythm and chords in music creation. Building upon the pre-trained MusicGen-melody framework, MusiConGen introduces temporal conditioning mechanisms that allow users to define musical features such as chord sequences, beats per minute (BPM), and textual descriptions to generate diverse and expressive music samples.
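To make the three inputs concrete, the sketch below shows how a text prompt, a BPM value, and a chord sequence could be laid out on a shared time axis before being handed to a model. It is an illustration only, not MusiConGen's actual interface: the `Conditions` container, the `beat_times` and `chord_timeline` helpers, the one-chord-per-four-beats default, and the looping of the progression are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Conditions:
    """Hypothetical container for the three user inputs (not MusiConGen's API)."""
    text: str
    bpm: float
    chords: List[str]
    beats_per_chord: int = 4  # assumption: one chord per bar of 4/4


def beat_times(bpm: float, duration_s: float) -> List[float]:
    """Return beat onset times in seconds for a constant tempo."""
    period = 60.0 / bpm  # seconds per beat
    times = []
    i = 0
    while i * period < duration_s:
        times.append(i * period)
        i += 1
    return times


def chord_timeline(cond: Conditions, duration_s: float) -> List[Tuple[float, float, str]]:
    """Return (start, end, chord) segments covering the clip.

    Assumption: the progression loops if it is shorter than the clip.
    """
    span = cond.beats_per_chord * 60.0 / cond.bpm  # seconds per chord
    segments = []
    i = 0
    start = 0.0
    while start < duration_s:
        end = min(start + span, duration_s)
        segments.append((start, end, cond.chords[i % len(cond.chords)]))
        i += 1
        start = i * span
    return segments


if __name__ == "__main__":
    cond = Conditions(text="laid-back blues", bpm=120, chords=["C", "G", "Am", "F"])
    print(beat_times(cond.bpm, 8.0))
    print(chord_timeline(cond, 8.0))
```

At 120 BPM each beat lasts 0.5 seconds, so a four-beat chord spans 2 seconds and a four-chord progression fills an 8-second clip exactly once.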

Key Features:

- Precise Control Over Musical Elements: MusiConGen enables users to specify chord progressions, tempo, and textual prompts, resulting in music that aligns closely with the desired specifications.

- Diverse Musical Styles: The model supports the generation of music across various genres, including blues, acid jazz, rock, funk, and heavy metal, each with distinct chord and rhythm patterns.

- Efficient Fine-Tuning: MusiConGen uses a fine-tuning mechanism designed to run on consumer-grade GPUs and integrates automatically extracted rhythm and chord data as conditioning signals.

- Open-Source Accessibility: The codebase, model checkpoints, and audio examples are publicly available, promoting transparency and enabling further research and development in the field of AI-driven music generation.

Applications:

MusiConGen serves as a valuable tool for musicians, composers, and content creators seeking to explore AI-assisted music composition. Its ability to generate music with specific rhythmic and harmonic characteristics makes it suitable for applications in film scoring, game audio design, and personalized music creation.